Lightweight workflow engine with distributed execution

Define workflows in YAML. Execute with a single binary. No database or message broker required. Ideal for VMs, containers, and bare metal.

Demo

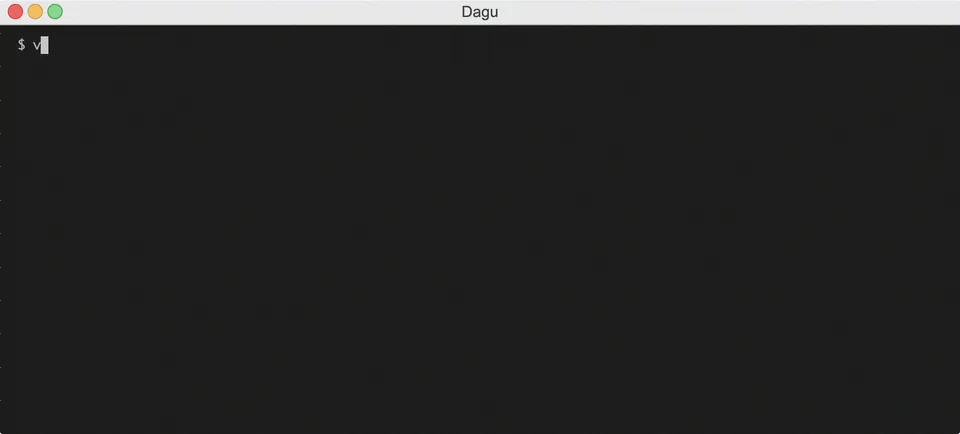

CLI: Execute workflows from the command line.

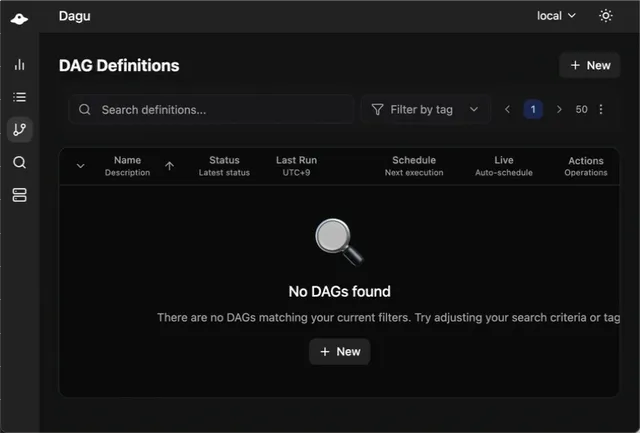

Web UI: Monitor, control, and debug workflows visually.

Why Boltbase?

- Single binary - No database, message broker, or external services required. See Architecture.

- Declarative YAML - Define workflows without writing code. See YAML Reference.

- Composable - Nest sub-workflows and pass parameters to them. See Control Flow.

- Distributed - Route tasks to workers by label. See Distributed Execution.

- Production-ready - Retries, lifecycle hooks, metrics, and RBAC. See Error Handling.

Quick Start

Install

Linux / macOS (install script):

```bash
curl -L https://raw.githubusercontent.com/dagu-org/dagu/main/scripts/installer.sh | bash
```

Windows (PowerShell):

```powershell
irm https://raw.githubusercontent.com/dagu-org/dagu/main/scripts/installer.ps1 | iex
```

Windows (cmd):

```cmd
curl -fsSL https://raw.githubusercontent.com/dagu-org/dagu/main/scripts/installer.cmd -o installer.cmd && .\installer.cmd && del installer.cmd
```

Docker:

```bash
docker run --rm -v ~/.boltbase:/var/lib/boltbase -p 8080:8080 ghcr.io/dagu-org/boltbase:latest boltbase start-all
```

Homebrew:

```bash
brew install boltbase
```

npm:

```bash
npm install -g --ignore-scripts=false @dagu-org/boltbase
```

Create a Workflow

```bash
cat > hello.yaml << 'EOF'
steps:
  - command: echo "Hello from Boltbase!"
  - command: echo "Step 2"
EOF
```

Run

```bash
boltbase start hello.yaml
```

Start Web UI

```bash
boltbase start-all
```

Visit http://localhost:8080
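Steps can also hand data to one another. The sketch below reuses the `output` key and `${...}` substitution from the pipeline example further down; it assumes they behave the same way in the default sequential mode as they do in a `type: graph` workflow. The file name and step names are illustrative.

```yaml
# greet.yaml: a minimal sketch; file and step names are illustrative.
# Assumption: `output` stores the step's stdout in GREETING, and later
# steps can reference it as ${GREETING}, as in the pipeline example.
steps:
  - name: greet
    command: echo "Hello from Boltbase!"
    output: GREETING

  - name: echo-back
    command: echo "The previous step said: ${GREETING}"
```

Run it the same way as the first workflow: `boltbase start greet.yaml`.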

Key Capabilities

| Capability | Description |
|---|---|
| Nested Workflows | Reusable sub-DAGs with full execution lineage tracking |
| Distributed Execution | Label-based worker routing with automatic service discovery |
| Error Handling | Exponential backoff retries, lifecycle hooks, continue-on-failure |
| Step Types | Shell, Docker, SSH, HTTP, JQ, Mail, and more |
| Observability | Live logs, Gantt charts, Prometheus metrics, OpenTelemetry |
| Security | Built-in RBAC with admin, manager, operator, and viewer roles |

Example

A data pipeline with scheduling, parallel execution, sub-workflows, and retry logic:

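```yaml
schedule: "0 2 * * *"   # every day at 02:00
type: graph             # step order comes from `depends`, not list order

steps:
  - name: extract
    command: python extract.py --date=${DATE}
    output: RAW_DATA    # capture stdout for later steps

  - name: transform
    call: transform-workflow          # run a sub-workflow
    params: "INPUT=${RAW_DATA}"
    depends: extract
    parallel:
      items: [customers, orders, products]   # one sub-workflow run per item

  - name: load
    command: python load.py
    depends: transform
    retry_policy:       # retry up to 3 times, 10 seconds apart
      limit: 3
      interval_sec: 10

# Hooks that run once the whole workflow finishes
handler_on:
  success:
    command: notify.sh "Pipeline succeeded"
  failure:
    command: alert.sh "Pipeline failed"
```

See Examples for more patterns.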
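The `transform` step calls a workflow named `transform-workflow`, which the example does not show. Below is a minimal sketch of such a child, under several assumptions: it lives in its own file, `transform-workflow.yaml`; a top-level `params` string declares `INPUT` with an empty default that the caller's `params: "INPUT=${RAW_DATA}"` overrides; and `transform.py` is a hypothetical placeholder script.

```yaml
# transform-workflow.yaml: hypothetical child for the example's `call`.
# Assumption: this top-level `params` string declares INPUT with an empty
# default; the parent step's params: "INPUT=${RAW_DATA}" overrides it.
params: "INPUT="

steps:
  # transform.py is a placeholder for your own transformation script.
  - name: transform
    command: python transform.py --input="${INPUT}"
```

Keeping the child in its own file lets other workflows reuse it with a different `INPUT`.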

Use Cases

- Data Pipelines - ETL/ELT with complex dependencies and parallel processing

- ML Workflows - GPU/CPU worker routing for training and inference

- Deployment Automation - Multi-environment rollouts with approval gates

- Legacy Migration - Wrap existing scripts without rewriting them
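For the legacy-migration case, existing scripts are typically wrapped as steps without modification. The sketch below uses only keys that appear in the pipeline example. `backup.sh`, `verify.sh`, and `alert.sh` are hypothetical placeholders. It assumes that steps without `depends` run in the order listed, as in `hello.yaml`, and that `handler_on` sits at the top level.

```yaml
# nightly-backup.yaml: a sketch of wrapping existing scripts unchanged.
# backup.sh, verify.sh, and alert.sh are hypothetical placeholders.
schedule: "0 3 * * *"   # every day at 03:00

steps:
  - name: backup
    command: ./backup.sh
    retry_policy:       # retry up to 2 times, 30 seconds apart
      limit: 2
      interval_sec: 30

  - name: verify
    command: ./verify.sh

# Assumption: top-level hook, as in the pipeline example.
handler_on:
  failure:
    command: alert.sh "Nightly backup failed"
```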

Quick Links: Overview | CLI | Web UI | API | Architecture